PyCon 2025 Review

Exciting and vibrant PyCon experience

I finally got to attend PyCon, which I had wanted to do for quite some time! Last year it was held in Suwon, which was a bit far from where I live, so I couldn’t make it. But when I heard that this year’s event would take place at Dongguk University, close to my home, I bought my ticket right away. Below I share my thoughts on the sessions that impressed me most and briefly reflect on my overall experience.

Awesome sock merchandise I bought at PyCon

You can find information about PyCon presentations and speakers at the link below:

Day 1

Building Ethical LLM Solutions That Don’t Cross the Line

Image source: Sprout Social

This session really drove home the growing impact AI is having on business and society. It became clear that simply improving model performance and accuracy isn’t enough anymore – the key challenge lies in finding the right balance between security, ethics, and productivity. What particularly struck me was how crucial it will be for the AI industry to discover ways to maintain innovation and productivity while adhering to security requirements like data protection and regulatory compliance.

The discussion about the technical, organizational, and regulatory challenges facing Korea’s AI industry was fascinating. The limitations of global models that don’t adequately reflect Korean cultural and linguistic contexts, along with the shortage of AI and security specialists, represent significant hurdles that our domestic AI industry needs to overcome. I also gained concrete insights into how alignment technologies like RLHF, PPO, and DPO work to align models with human intentions and ethical standards. This reinforced my belief that AI needs to evolve beyond providing merely “smart answers” to delivering “correct and safe answers” – that’s where the real value lies.
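To make the DPO idea concrete for myself, here is a minimal sketch of its per-example loss in plain Python. It is not taken from the talk: the function name `dpo_loss`, the argument names, and the default `beta=0.1` are my own assumptions, and real training code would work on batched tensors in a deep learning framework. The inputs are summed log-probabilities of a preferred ("chosen") and a dispreferred ("rejected") response under the model being trained and under a frozen reference model.

```python
import math


def _softplus(x: float) -> float:
    """Numerically stable log(1 + exp(x))."""
    return max(x, 0.0) + math.log1p(math.exp(-abs(x)))


def dpo_loss(
    policy_chosen_logp: float,
    policy_rejected_logp: float,
    ref_chosen_logp: float,
    ref_rejected_logp: float,
    beta: float = 0.1,  # assumed value; controls how far the policy may drift from the reference
) -> float:
    """Per-example DPO loss: -log(sigmoid(beta * (chosen margin - rejected margin)))."""
    # How much more (or less) likely each response is under the policy than the reference.
    chosen_margin = policy_chosen_logp - ref_chosen_logp
    rejected_margin = policy_rejected_logp - ref_rejected_logp
    logits = beta * (chosen_margin - rejected_margin)
    # -log(sigmoid(z)) equals softplus(-z).
    return _softplus(-logits)


if __name__ == "__main__":
    # Policy identical to the reference: no preference has been learned yet.
    print(dpo_loss(-2.0, -2.0, -2.0, -2.0))
    # Policy has shifted probability toward the chosen response: lower loss.
    print(dpo_loss(-1.0, -3.0, -2.0, -2.0))
```

The loss shrinks as the policy raises the chosen response's likelihood relative to the reference and lowers the rejected one's, which is how preference pairs steer the model without a separate reward model.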

AI Platform Journey with Python

This presentation showcased the solutions Karrot implemented to handle their explosive user growth and the corresponding surge in model pipeline demands. The talk was brilliantly structured into two parts: Model Training and Model Inference, with each section demonstrating a systematic approach that was truly impressive.

In the model training section, I was particularly struck by their efforts to address Python’s high flexibility, which can sometimes be a double-edged sword. They leveraged Kubeflow and TFX (TensorFlow Extended) to enhance pipeline reusability, and used protobuf to clearly define and manage training and pipeline parameters, effectively reducing data type ambiguity. Their use of the uv package manager combined with pyproject.toml to create a faster, more efficient training environment was also noteworthy.
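The talk used protobuf for this, which needs a schema file and a compile step. As a stand-in that runs with only the standard library, the sketch below uses a frozen dataclass to show the same underlying idea: declare each training parameter with an explicit type and fail fast when a value doesn't match. The class name, field names, and values are all hypothetical, not Karrot's actual schema.

```python
from dataclasses import dataclass, fields

# Expected type for each field (hypothetical parameters, for illustration only).
_EXPECTED_TYPES = {
    "learning_rate": float,
    "batch_size": int,
    "num_epochs": int,
    "model_name": str,
}


@dataclass(frozen=True)
class TrainingParams:
    learning_rate: float
    batch_size: int
    num_epochs: int
    model_name: str

    def __post_init__(self) -> None:
        # Strict type check so that "32" or True is never silently accepted as an int.
        for field in fields(self):
            value = getattr(self, field.name)
            expected = _EXPECTED_TYPES[field.name]
            if type(value) is not expected:
                raise TypeError(
                    f"{field.name} must be {expected.__name__}, "
                    f"got {type(value).__name__}"
                )


if __name__ == "__main__":
    params = TrainingParams(
        learning_rate=0.001, batch_size=32, num_epochs=3, model_name="demo"
    )
    print(params)

    try:
        TrainingParams(
            learning_rate=0.001, batch_size="32", num_epochs=3, model_name="demo"
        )
    except TypeError as err:
        print(f"Rejected: {err}")
```

A protobuf schema goes further than this, since the same definition can be shared across languages and pipeline components, but the benefit described in the talk is the same: parameter types are stated once and checked, rather than inferred from whatever happens to be passed in.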

The model inference section focused on optimization strategies for handling massive traffic loads. Their architecture built on Apache Beam and Dataflow, with meticulous optimization of network I/O and GPU memory usage to improve overall efficiency and stability, was genuinely fascinating.

As someone deeply interested in MLOps, this session was definitely one of the most impressive presentations at this year’s PyCon. I’m already looking forward to rewatching the video once it’s released to dive deeper into all the technical details!

Day 2

Weaving Your Own Legacy with Python (Park Hae-seon)

Image source: gitfiti repository

This session was presented by Park Hae-seon, who has been consistently writing and translating machine learning books and educational materials. I’ve personally learned a lot from his translations of books like “Hands-On Machine Learning” and “Natural Language Processing with Transformers,” so it was incredibly exciting to meet him in person and hear his presentation firsthand.

The core message of his talk was “Build your own legacy in your development career.” What particularly resonated with me was his observation that “workplace tasks are unlikely to provide 100% motivation from within.” While our jobs can sometimes align perfectly with our interests and strengths, there’s no guarantee this will always be the case. That’s why it’s crucial to build our own “legacy” or “history” that can provide long-term career motivation. He suggested ways to create this legacy through community participation, open-source contributions, and speaking at conferences like PyCon.

I had already been thinking about presenting at the next PyCon while attending this one, but this session really amplified that desire. I realized that the process of building your own legacy through presentation preparation is valuable in itself. Just like how teachers often learn more than their students when preparing lessons, I’m convinced that preparing for a presentation will help me learn and grow tremendously. This talk provided me with powerful motivation and was truly meaningful for my personal development journey.

Making Development Enjoyable: More Focused Python Development with Generative AI and Containers

Image source: kiro dev blog

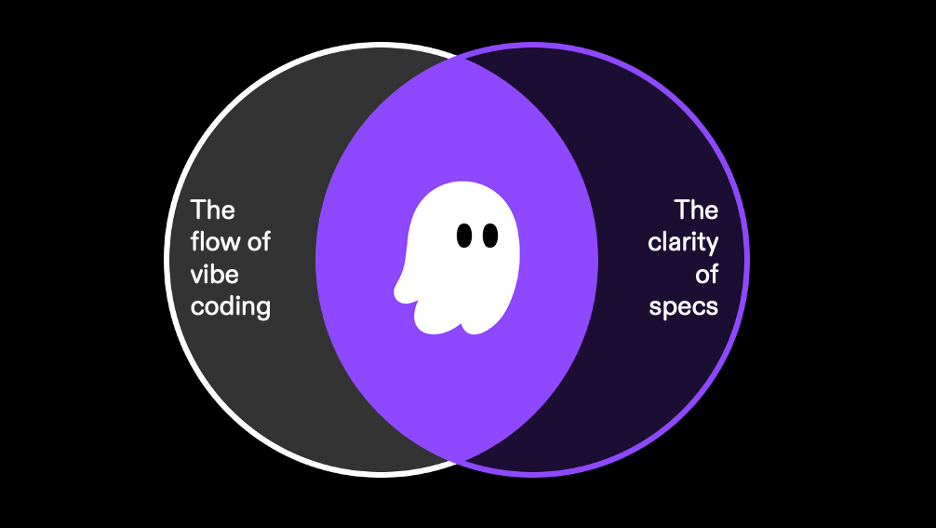

While this presentation had a strong promotional element for AWS’s development tool ‘Kiro,’ it went far beyond a simple product introduction and offered valuable insights into the future of development culture.

Most developers, myself included, typically jump straight into prompting when using AI tools like ChatGPT or Cursor for coding or debugging. While this approach offers speed advantages, it often leads to issues like ignoring team coding conventions or making unnecessary file modifications – essentially losing important code context.

Kiro’s approach was refreshingly different. When starting a new project, it begins by organizing the project scope, requirements, limitations, tech stack, and other details into a Tech Spec before diving into development. This entire process is automatically documented in markdown, maintaining a more systematic and consistent development environment.

Observing Kiro’s development process made me realize that documentation will become even more critical in future development culture. While documentation was already essential for smooth developer communication before the AI era, I now see that well-documented teams will be able to leverage AI tools more effectively and communicate more efficiently with AI systems.

I also recognized that the ability to precisely explain requirements has become a core skill when collaborating with AI. Not just developers, but also product managers and designers will need to develop clear documentation and communication skills – this will be key to enhancing collaboration efficiency in the future.